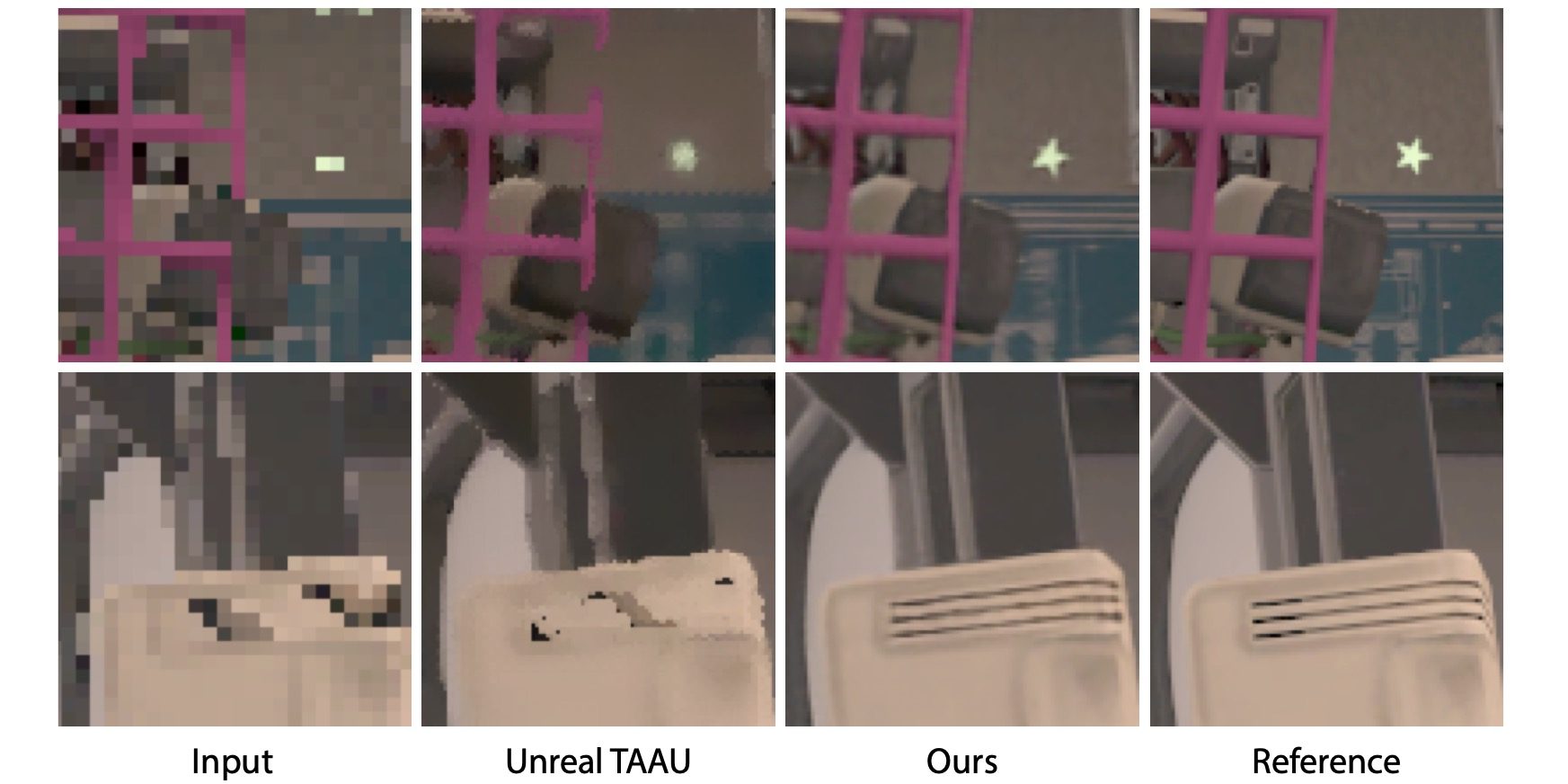

Rendering 3D graphics for the latest high-resolution displays has never been easy, and the challenge multiplies for VR headsets, which drive twin displays at high refresh rates. Oculus’ parent company Facebook knows this all too well. Today, Facebook researchers revealed a new technique for upsampling real-time-rendered 3D content. It uses machine learning to instantly transform low-resolution, computationally cheaper imagery into a very close approximation of much higher-resolution reference images.

The easiest way to understand Facebook’s innovation is to imagine the Mona Lisa rendered as only 16 colored squares arranged in a 4×4 grid. A human looking at the grid would see an unforgivably jaggy, boxy image and might recognize the Mona Lisa’s famous outlines, but a trained computer could instantly identify the grid and replace it with the original piece of art. Using three-layer convolutional neural networks, Facebook’s researchers have developed a technique that works not just for flat images but for 3D rendered scenes. It takes color, depth, and temporal motion vectors into account, transforming “highly aliased input” into “high fidelity and temporally stable results in real-time.”
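The Mona Lisa analogy can be made concrete with a toy sketch. Assuming a grayscale image stored as nested Python lists, the hypothetical helpers `downsample_to_grid` and `upsample_nearest` below shrink an image to a 4×4 grid of averaged squares and then scale it back up by simply repeating each square. The blocky result is the kind of low-resolution input a learned upsampler is asked to improve. This is not Facebook’s method: their system uses a trained neural network that also draws on depth and motion data, whereas this sketch only shows the naive baseline.

```python
from typing import List

Image = List[List[float]]  # grayscale image: rows of pixel intensities


def downsample_to_grid(img: Image, grid: int) -> Image:
    """Average non-overlapping blocks so the image becomes grid x grid squares.

    Assumes the image height and width are both divisible by `grid`.
    """
    h, w = len(img), len(img[0])
    bh, bw = h // grid, w // grid  # size of each block in pixels
    out: Image = []
    for gy in range(grid):
        row = []
        for gx in range(grid):
            block = [img[y][x]
                     for y in range(gy * bh, (gy + 1) * bh)
                     for x in range(gx * bw, (gx + 1) * bw)]
            row.append(sum(block) / len(block))  # one flat color per square
        out.append(row)
    return out


def upsample_nearest(small: Image, factor: int) -> Image:
    """Scale up by repeating each square: the 'jaggy, boxy' baseline."""
    return [[small[y // factor][x // factor]
             for x in range(len(small[0]) * factor)]
            for y in range(len(small) * factor)]


# Usage: an 8x8 diagonal gradient reduced to 16 squares, then blown back up.
img = [[float(x + y) for x in range(8)] for y in range(8)]
grid = downsample_to_grid(img, 4)  # 4x4 grid of averaged squares
boxy = upsample_nearest(grid, 2)   # 8x8 again, but visibly blocky
```

The smooth gradient comes back as 2×2 blocks of identical values; recovering the lost detail is the job a trained upsampler takes on.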
