Last year, Australian company Depth Analysis took performance-driven animation to the next level with MotionScan—a technology that utilizes 32 high-definition cameras to capture an actor’s performance without the need for a special ping-pong-ball suit—in Rockstar Games’ L.A. Noire. Now, U.K.-based startup Speech Graphics wants to revolutionize audio-driven animation through new software that uniquely predicts how the muscles of the human face move to produce sounds.

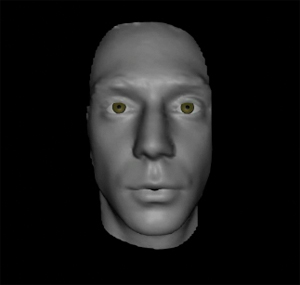

The new lip-synching technology uses only audio input to automatically make a 3D character speak. Because it relies on a universal physics model, the company says it will work across all languages, saving game development time and resources.

An award-winning team from the University of Edinburgh’s School of Informatics and the Centre for Speech Technology Research, Speech Graphics co-founders Michael Berger and Dr. Gregor Hofer have a combined 20 years of experience in speech technology and animation. They say they created their new software in response to the rising quantity and cost of spoken dialogue in today’s story-driven video games, such as BioWare’s recently released massively multiplayer online role-playing game Star Wars: The Old Republic (SW:TOR). SW:TOR, which contains 260,000 lines of voiced dialogue in three languages, reportedly cost nearly $200 million to develop.

“With our technology, we’re aiming for robust high quality, even better than what you can achieve with motion capture. And because it’s audio-driven, it’s at a price point that makes it scalable to huge amounts of speech,” said Berger.

Berger says capturing the fluidity and accuracy in natural speech is one of the most difficult things to do convincingly in facial animation. “People are very sensitive to this when you get it wrong because we are all focused on faces,” he said.

Although the company’s lip-synch service has not yet been used in any released game, Dr. Hofer told VentureBeat by email that a motion capture studio and an unnamed triple-A developer have both integrated the technology into their production pipelines.

Speech Graphics will showcase its new technology in seven languages (English, German, French, Spanish, Japanese, Korean, and Russian) at the Game Developers Conference in San Francisco, Calif., next month.

[vimeo http://www.vimeo.com/36496897 w=600&h=360]