AstroBeam, a new virtual reality game studio, unveiled its debut title, Stellar Cafe, which is a showcase for real-time voice conversations between the player and AI.

Created by former Owlchemy Labs cofounder and CEO Devin Reimer, Stellar Cafe is the first VR game built entirely around real-time AI-powered conversation, using large language models to enable unscripted, dynamic interactions by interpreting voice input and generating voice output in real time.

It’s an innovative new comedy game from the team that, while at Owlchemy Labs, made titles like Vacation Simulator and Job Simulator.

AstroBeam also announced that they have licensed Owlchemy Labs’ hand-tracking technology, enabling players to navigate their way through Stellar Cafe using only their hands and voice. Stellar Cafe launches later this year for Meta Quest, and it’s debuting on the VR Showcase today.

Watch the trailer for Stellar Cafe here.

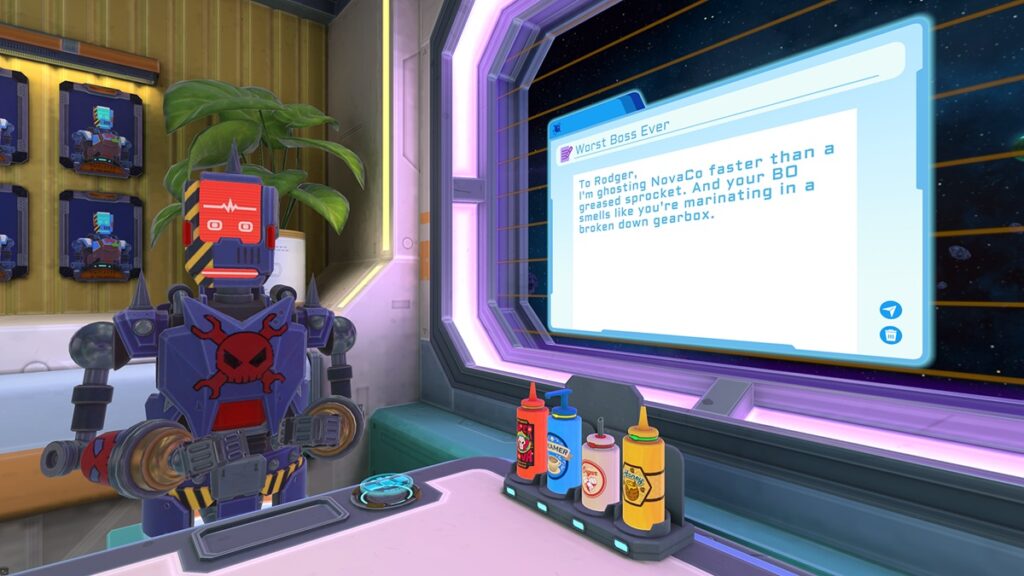

Stellar Cafe invites you to step into a vibrant, quirky café among the stars where the coffee is hot and the robots have stories to tell. It’s up to you to uncover their stories and personalities and help solve their problems.

But these robots aren’t stereotypical NPCs with scripted dialogue. The patrons of Stellar Cafe remember what you say, respond with understanding and context, and reveal themselves through conversations that evolve as you get to know them.

“Stellar Cafe is our first step towards big possibilities for how we tell stories and interact in VR using voice input,” said Devin Reimer, CEO and founder of AstroBeam. “By combining handcrafted characters with real-time AI conversation, we’re creating interactions that feel natural and real. We’re not using AI to replace creativity, but we’re using it to enable a new form of interactivity through voice.”

Playing the game

Stellar Cafe is a zany place run by robots. Using your voice, you can tell a robot that you want a smoothie (see the video) with ketchup, mustard and relish. The robot replies in an unscripted way, “One hot dog smoothie coming right up.”

You might not notice from just watching the video, but those robots are generating their responses with AI. It’s a sign that AI is finally living up to the notion that it can replace scripted NPCs with characters that give dynamic and intelligent responses.

At the cafe, you arrive as a human. James, the robot barista, has a problem: he’s trying to throw a big party in a few days, but none of the robots have RSVP’d.

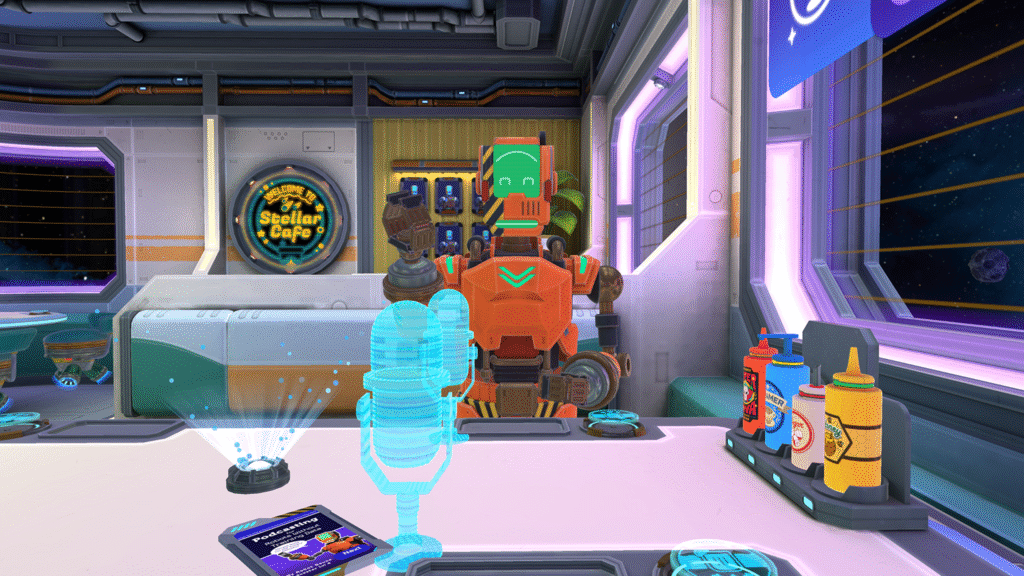

The robot Cindy, for example, is super into podcasting, but she can’t go to the party because she needs to solve a problem so that her sponsor doesn’t drop her. You keep coming back to the cafe, and there is a new set of robots every day.

Here’s what you can expect in Stellar Cafe:

- A Quirky Cast of Characters That Need Your Help: Get to know a variety of robots across the cafe and chat with them to uncover problems that they need help solving, whether it’s helping them quit their job or becoming their first human podcast guest.

- Completely Controller-Free Interactions: Navigate the café like a true interstellar local with no controllers or menus required, thanks to voice input and Owlchemy Labs’ signature hand-tracking technology.

- An Out-of-this-world Cup of Joe: Feeling thirsty? Thanks to AI-powered conversation, you can chat up the barista and place all kinds of cosmic drink orders, whether you’re craving a Meteor Mocha, an Astro Algae Smoothie, or inventing your own galactic concoction on the fly.

Origins

AstroBeam started 2.5 years ago and has a team of six people, plus some contractors. The company raised a seed round of $3 million at the end of 2023. The team had to experiment to figure out how to build a voice-driven game.

AstroBeam was founded by Reimer, cofounder and former CEO/CTO of Owlchemy Labs – the creators of Job Simulator and Vacation Simulator. AstroBeam is focused on creating incredibly natural and highly interactive ways to interact with games and NPCs using your voice.

Reimer said that the team has been working for over two years to figure out what it would mean to make games where voice was the true input.

“Up to now, there have been a few games that have used voice. There’s games where maybe you say a particular word and maybe detect that one word and then perform an action,” Reimer said.

He said the team considered what it would build if it was fundamentally about the player just naturally speaking, instead of having to kind of figure out particular things to say.

Reimer said, “We’ve been working really hard on building up server-side infrastructure to handle this really complicated task of being able to kind of ingest what you’re speaking, converting that over to text, running that through our dynamic prompts and our LLMs to get a response, and then bringing that back over into actions and also speech, moving from text back to speech, all within this really real-time sense, right?”
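
As a rough illustration of the flow Reimer describes (speech to text, a dynamic prompt, an LLM response, then actions and speech), here is a minimal Python sketch. It is not AstroBeam’s code: every function name, the canned reply, and the idea of passing text bytes in place of audio are assumptions made so the example can run on its own without any speech or LLM service.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class NpcTurn:
    """One robot reply: the line to speak plus any in-world actions."""
    speech_text: str
    actions: list[str] = field(default_factory=list)


def speech_to_text(audio: bytes) -> str:
    # Hypothetical stand-in for a speech recognizer.
    # Here the "audio" is simply UTF-8 text.
    return audio.decode("utf-8")


def build_prompt(character: str, memory: list[str], player_text: str) -> str:
    # A simple "dynamic prompt": who the robot is, what was said
    # earlier, and the player's newest line.
    history = "\n".join(memory)
    return f"You are {character}.\nEarlier:\n{history}\nPlayer: {player_text}"


def run_llm(prompt: str) -> NpcTurn:
    # Hypothetical stand-in for a server-side LLM call.
    # It looks only at the newest player line and returns a canned reply.
    player_line = prompt.rsplit("Player: ", 1)[-1]
    if "smoothie" in player_line.lower():
        return NpcTurn("One smoothie coming right up.", ["make_drink"])
    return NpcTurn("Tell me more.")


def text_to_speech(text: str) -> bytes:
    # Hypothetical stand-in for a speech synthesizer.
    return text.encode("utf-8")


def handle_utterance(
    audio: bytes, character: str, memory: list[str]
) -> tuple[bytes, list[str]]:
    """Run one player utterance through every stage of the pipeline."""
    player_text = speech_to_text(audio)
    prompt = build_prompt(character, memory, player_text)
    turn = run_llm(prompt)
    # Remember both sides of the exchange so later prompts have context.
    memory.append(f"Player: {player_text}")
    memory.append(f"{character}: {turn.speech_text}")
    return text_to_speech(turn.speech_text), turn.actions
```

Calling `handle_utterance(b"I want a smoothie", "James the barista", [])` returns the reply audio along with a `make_drink` action. In a real system each stub would be a network call to a separate service, which is where the latency pressure comes from.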

Reimer added, “We’re trying to get it to the point that it actually felt like a conversation and a back and forth with these characters. And so then we had that part, and then the other part is kind of, ‘How do we incorporate the things that we wanted from a game? How do we kind of have some progress? How do we have actions? How do we have interactivity in the world itself?’ And so that’s been a huge challenge.”

The LLM has to have the intelligence and processing power and speed to deal with words, emotions, actions, what to do next and any change in goals.

“All of that stuff has been incredibly challenging, but it’s one of those things that, particularly within VR, like, you start feeling this sense of presence with these robots, in just a way that I’ve never experienced before,” Reimer said.

This is a tough tech problem, as large language models need a lot of processing power in data centers to recognize natural language in real time. It is possible to do some of the processing on “edge” devices like phones or PCs, but those devices generally aren’t capable enough to do much of that processing in real time.

So Reimer’s team had to “build out pretty complex server-side tech to handle all the steps of converting text into speech running at really quality, high speed, LLMs, and then converting it back in a way that we just couldn’t do on devices themselves.”

“That’s too much for today’s PCs to do. And for mobile, and then for VR, that’s just untenable,” he said.

The tech will eventually get there through proposed technologies such as the AI PC, which promises to do a lot more processing at the edge device. But it’s not there yet because of the need for low latency, or short response times. In this case, the game had to run on Meta Quest 2 and Meta Quest 3 devices, which are standalone VR devices.

One of the things that helps is that there are multiple microphones on the latest Quest VR devices, and that helps pick up sounds with good quality. The sense of presence with VR makes you feel like you’re sitting across from an NPC that is talking to you.

“In the real-time conversation side, we need these responses in hundreds of milliseconds to make sure that we have something that feels like a natural conversation. So I’m confident it will get there at some point,” Reimer said. “But I also think there’s going to be this push and pull where, as the technology gets better on the device side of things, the quality of LLMs we want to run are going to go up. And so it’s going to be like chasing these things for a while. And so that’s why we became pretty convinced that, like building out a good server-side architecture was going to be the right call for some time.”
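
To make the “hundreds of milliseconds” target concrete, here is a small Python sketch of a latency budget check across pipeline stages. The stage names, the timings, and the 500 ms budget are invented for illustration. They are not measurements from Stellar Cafe.

```python
from __future__ import annotations


def check_budget(
    stage_ms: dict[str, float], budget_ms: float
) -> tuple[float, bool, str]:
    """Return total latency, whether it fits the budget, and the slowest stage."""
    total = sum(stage_ms.values())
    slowest = max(stage_ms, key=lambda name: stage_ms[name])
    return total, total <= budget_ms, slowest


# Made-up per-stage timings in milliseconds (assumptions, not real data).
example_stages = {
    "speech_to_text": 120.0,
    "llm_response": 250.0,
    "text_to_speech": 100.0,
    "network_round_trip": 80.0,
}

total_ms, within_budget, slowest_stage = check_budget(example_stages, budget_ms=500.0)
```

With these invented numbers the stages add up to 550 ms, which is over the 500 ms budget, and the LLM step is the slowest. That is the kind of arithmetic that decides whether a reply feels conversational or laggy.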

Some of the ideas went back years. But then, all of a sudden, a whole bunch of things started happening at the same time in machine learning that allowed the firm to pick out words more accurately, generate better text-to-speech output, and get better reasoning, which made a huge difference, Reimer said.

Reimer said he has always tried to cross tech barriers while designing fun games. Job Simulator was a case in point, where it was a comedy game set in a spatial computing VR space.

“It allowed us to go and test all of these things in an environment where people were more receptive to trying out these different types of things. Some worked, some didn’t work, but it allowed us to pave the way there. I saw the same opportunity over here, where it definitely was a long shot if we’re going to be able to pull this off. But I’m super happy that I took that path because it’s starting to come together,” Reimer said.

The game sessions last around 30 to 40 minutes so far, and while that may seem short, it’s a long time in a medium where people sometimes don’t last long. In a surprise to me, Reimer said there are no scripted lines. All the dialogue is dynamic.

The game is aimed at a 13+ age group, so the team has had to spend a lot of time building guardrails while keeping the robots’ personalities off the cuff. The team has to figure out what kind of commentary is age-appropriate. Reimer said his team has been learning a lot and is eager to share some of the lessons about how they thought through the challenging problems and got to the point where they could announce the game.

“It’s quite a journey. I’m super excited to get people to see this. One of the things that has been so awesome through this is we’ve been doing playtesting across people,” Reimer said.