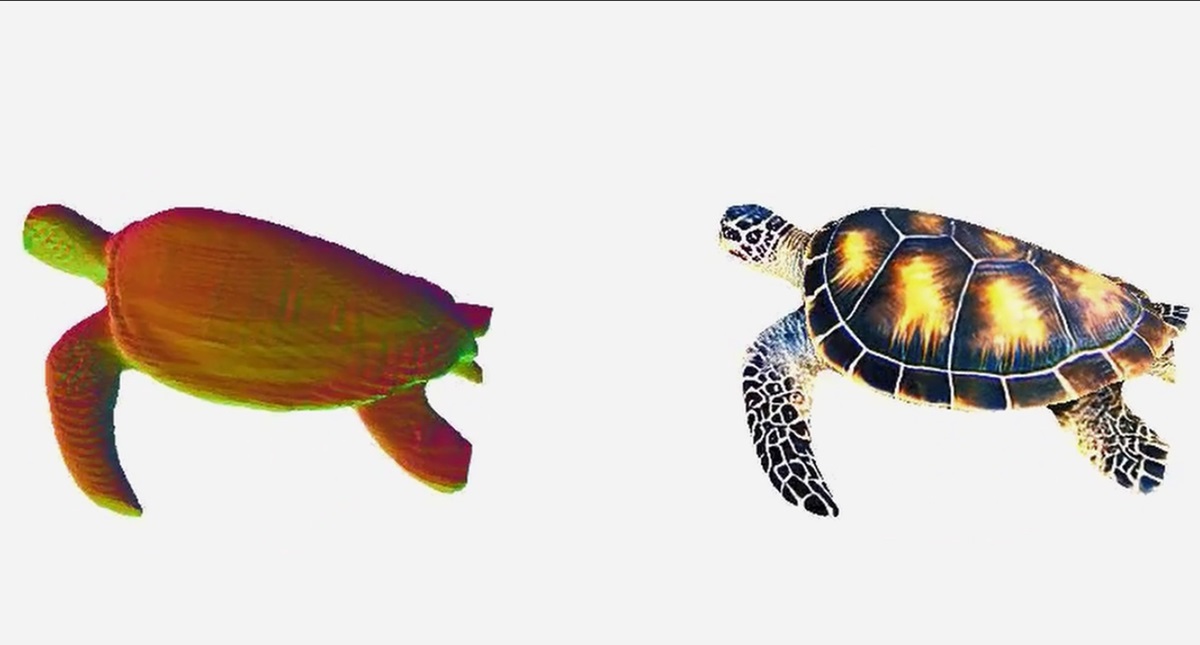

Nvidia unveiled Latte3D, a generative AI model that instantly generates 3D shapes from text. The text-to-3D model can produce high-quality 3D shapes in milliseconds.

Crafted by Nvidia’s AI lab team in Toronto, Latte3D represents a significant advancement in the field of artificial intelligence, offering near-real-time generation of 3D objects and animals from simple text prompts, Nvidia said.
